These Class 10 AI notes cover Chapter 7, Evaluation.

Class 10 AI Evaluation Notes

What is Evaluation?

Evaluation is the process of understanding the reliability of any AI model by feeding a test dataset into the model and comparing its outputs with the actual answers. Different evaluation techniques can be used, depending on the type and purpose of the model.

Evaluation is a process that critically examines a program. It involves collecting and analysing information about a program’s activities, characteristics, and outcomes. Its purpose is to make judgments about a program, to improve its effectiveness, and/or to inform programming decisions.

So, evaluation is basically a check of your AI model's performance. It relies on two things: “Prediction” and “Reality”. Evaluation is done as follows:

- First, find some testing data for which the correct outcome is known with certainty.

- Then feed that testing data to the AI model while keeping the correct outcome, termed “Reality”, with yourself.

- When you get the predicted outcome from the AI model, termed “Prediction”, compare it with the “Reality”.

You can do this for:

- Improving the efficiency and performance of your AI model.

- Checking your mistakes and correcting them.

Importance of Evaluation:

- Evaluation helps us check whether the model is operating correctly and optimally.

- Evaluation helps us understand how well the model achieves its goals.

- Evaluation helps us determine what works well and what could be improved in a model.

Model Evaluation Terminologies

Various new terminologies come into the picture when we evaluate a model.

Let’s explore them with an example of the forest fire scenario.

Consider developing an AI-based prediction model and deploying it in a forest that is prone to forest fires. The model’s goal is to predict whether or not a forest fire has started. We need to consider two things: prediction and reality. The prediction is the machine’s output, while the reality is the actual situation in the forest at the time of the prediction.


Case 1: True Positive

Here, a forest fire has broken out in the forest, so the Reality is Yes. The model predicts a Yes, which means there is a forest fire. The Prediction matches the Reality. Hence, this condition is termed True Positive.

Case 2: True Negative

Here, there is no fire in the forest, so the Reality is No. The machine has also correctly predicted a No. Therefore, this condition is termed True Negative.

Case 3: False Positive

Here, the Reality is that there is no forest fire, but the machine has incorrectly predicted that there is one. This case is termed False Positive.

Case 4: False Negative

Here, a forest fire has broken out in the forest, so the Reality is Yes, but the machine has incorrectly predicted a No, which means it predicts that there is no forest fire. Therefore, this case is termed False Negative.
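The four cases can also be written as a small Python sketch. The function name `classify_outcome` and the use of `True` to mean "fire" are assumptions made for illustration only.

```python
def classify_outcome(prediction: bool, reality: bool) -> str:
    """Name the case for one prediction/reality pair (True means "fire")."""
    if prediction == reality:
        # Prediction matches Reality, so the first word is "True".
        return "True Positive" if prediction else "True Negative"
    # Prediction does not match Reality, so the first word is "False".
    return "False Positive" if prediction else "False Negative"


# Walk through all four combinations of prediction and reality.
for prediction, reality in [(True, True), (False, False), (True, False), (False, True)]:
    print(f"prediction={prediction}, reality={reality}: {classify_outcome(prediction, reality)}")
```

Note that the second word (Positive or Negative) always comes from the prediction, while the first word (True or False) says whether the prediction matched the reality.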

Confusion matrix

The comparison between the results of Prediction and reality is called the Confusion Matrix.

- The confusion matrix is a record that helps us in finding the metrics.

- The confusion matrix is not a conclusion; it is a comparison of prediction and reality.

The result of a comparison between the prediction and reality can be recorded in what we call the confusion matrix. The confusion matrix allows us to understand the prediction results. Note that it is not an evaluation metric but a record that can help in evaluation.

Let us once again take a look at the four conditions that we went through in the forest fire example:

| Condition | Do Prediction and Reality match? | Prediction |
| --- | --- | --- |
| True Positive | Yes (True) | True (Positive) |
| True Negative | Yes (True) | False (Negative) |
| False Positive | No (False) | True (Positive) |
| False Negative | No (False) | False (Negative) |


Prediction and Reality can be easily mapped together with the help of this confusion matrix.

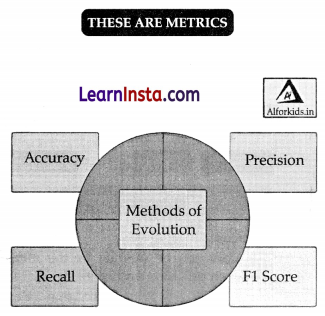
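As a rough sketch, the counts that fill a confusion matrix can be obtained by comparing each prediction with the matching reality. The function name `confusion_counts` and the six-observation dataset below are hypothetical, and the code assumes every value is either 1 (Yes) or 0 (No).

```python
def confusion_counts(predictions, realities):
    """Count TP, TN, FP and FN for paired lists of 1 (Yes) and 0 (No)."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for predicted, actual in zip(predictions, realities):
        if predicted == 1 and actual == 1:
            counts["TP"] += 1
        elif predicted == 0 and actual == 0:
            counts["TN"] += 1
        elif predicted == 1 and actual == 0:
            counts["FP"] += 1
        else:
            # Remaining case: predicted 0 while the reality was 1.
            counts["FN"] += 1
    return counts


# Hypothetical test data: six observations, 1 = fire, 0 = no fire.
predictions = [1, 0, 1, 0, 1, 0]
realities = [1, 0, 0, 1, 1, 0]

counts = confusion_counts(predictions, realities)
print(counts)  # {'TP': 2, 'TN': 2, 'FP': 1, 'FN': 1}
```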

Evaluation Metrics for AI Model

Evaluation Methods: Accuracy, precision, and recall are the three primary measures used to assess the success of a classification algorithm.

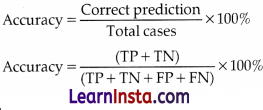

Accuracy

Accuracy is the percentage of correct predictions out of all the observations. A prediction is correct when it matches the reality, so accuracy considers the True Positives and True Negatives. The accuracy calculation is as follows:

\(\text{Accuracy} = \frac{\text{Correct predictions}}{\text{Total observations}} = \frac{\text{TP} + \text{TN}}{\text{TP} + \text{TN} + \text{FP} + \text{FN}}\)

Here, total observations cover all the possible cases of prediction that can be True Positive (TP), True Negative (TN), False Positive (FP) and False Negative (FN).
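A minimal sketch of the accuracy calculation, dividing the correct predictions (TP + TN) by the total observations. The function name and the counts used in the example are hypothetical.

```python
def accuracy(tp, tn, fp, fn):
    """Correct predictions (TP + TN) divided by total observations."""
    total = tp + tn + fp + fn
    # Return 0.0 when there are no observations, to avoid dividing by zero.
    return (tp + tn) / total if total else 0.0


# Hypothetical counts: 2 TP, 2 TN, 1 FP, 1 FN (4 correct out of 6).
print(round(accuracy(tp=2, tn=2, fp=1, fn=1), 3))  # 0.667
```

Multiply the result by 100 to express it as a percentage.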

Precision

Precision is the percentage of true positive cases out of all the cases where the prediction is positive. That is, it takes into account the True Positives and False Positives.

\(\text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}}\)
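In code, precision could be sketched as below, dividing the True Positives by all positive predictions (TP + FP). The function name and the example counts are assumptions for illustration.

```python
def precision(tp, fp):
    """True Positives divided by all positive predictions (TP + FP)."""
    predicted_positive = tp + fp
    # Return 0.0 when the model never predicted positive.
    return tp / predicted_positive if predicted_positive else 0.0


# Hypothetical: the model said "fire" 3 times and was right twice.
print(round(precision(tp=2, fp=1), 3))  # 0.667
```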

Recall

Recall is the percentage of actual positive cases that the model correctly detected. It considers the scenarios where a fire actually existed in reality, whether the machine recognised it correctly or not. That is, it takes into account both True Positives (there was a forest fire in reality and the model predicted it) and False Negatives (there was a forest fire but the model didn’t predict it).

\(\text{Recall} = \frac{\text{TP}}{\text{TP} + \text{FN}}\)
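A similar sketch for recall, dividing the True Positives by all cases that were positive in reality (TP + FN). The function name and the example counts are hypothetical.

```python
def recall(tp, fn):
    """True Positives divided by all actual positives (TP + FN)."""
    actual_positive = tp + fn
    # Return 0.0 when there were no positive cases in reality.
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical: 4 real fires, the model caught 3 and missed 1.
print(recall(tp=3, fn=1))  # 0.75
```

The numerator is the same as for precision; only the denominator changes, from False Positives to False Negatives.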

Which Metric is Important?

Choosing between Precision and Recall depends on the situation in which the model has been deployed. In a situation like a forest fire, a False Negative can cost us a lot of money and put us in danger. Imagine that no alert is raised even though a forest fire has broken out. The entire forest might catch fire.

Viral Outbreak is another situation in which a False Negative might be harmful. Consider a scenario in which a fatal virus has begun to spread but is not being detected by the model used to forecast viral outbreaks. The virus may infect numerous people and spread widely.


Now consider a model that determines whether a mail is spam or not. If the model wrongly predicts that an important mail is spam, people would not read it, which could eventually lead to the loss of crucial information.

The cost of a False Positive (predicting that a message is spam when it is not) would be high in this case. So Recall matters more when False Negatives are costly, and Precision matters more when False Positives are costly.
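To make the trade-off concrete, here is a small sketch that compares two imaginary models on the same test data. All counts are hypothetical and chosen only to illustrate the point; neither model comes from the original notes.

```python
def precision(tp, fp):
    return tp / (tp + fp)


def recall(tp, fn):
    return tp / (tp + fn)


# Hypothetical counts for two models tested on the same 100 observations
# (10 real positives, 90 real negatives).
model_a = {"TP": 9, "FN": 1, "FP": 6, "TN": 84}  # raises alarms readily
model_b = {"TP": 5, "FN": 5, "FP": 0, "TN": 90}  # raises alarms rarely

for name, m in [("A", model_a), ("B", model_b)]:
    p = precision(m["TP"], m["FP"])
    r = recall(m["TP"], m["FN"])
    print(f"Model {name}: precision={p:.2f}, recall={r:.2f}")
# Model A: precision=0.60, recall=0.90
# Model B: precision=1.00, recall=0.50
```

Model A misses only one real positive, so it would suit the forest fire case, where False Negatives are costly. Model B never raises a false alarm, so it would suit the spam filter, where False Positives are costly.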

F1 Score

F1 score can be defined as the measure of balance between precision and recall.

\(\text{F1 Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}\)

- Take a look at the formula and think about when we can get a perfect F1 score.

- An ideal situation would be when both Precision and Recall have a value of 1 (that is, 100%). In that case, the F1 score would also be an ideal 1 (100%).

- This is known as the perfect value for the F1 score. As the values of both Precision and Recall range from 0 to 1, the F1 score also ranges from 0 to 1.

Let us explore the variations we can have in the F1 Score:

| Precision | Recall | F1 Score |
| --- | --- | --- |
| Low | Low | Low |
| Low | High | Low |
| High | Low | Low |
| High | High | High |

In conclusion, we can say that a model has good performance if the F1 Score for that model is high.
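The variations in the table can be checked with a short sketch. The values 0.1 for "Low" and 0.9 for "High" are assumptions picked for illustration, and the function name `f1_score` is hypothetical.

```python
def f1_score(precision, recall):
    """F1 = 2 * (Precision * Recall) / (Precision + Recall)."""
    if precision + recall == 0:
        # Both values are zero, so the formula would divide by zero.
        return 0.0
    return 2 * precision * recall / (precision + recall)


LOW, HIGH = 0.1, 0.9  # hypothetical stand-ins for "Low" and "High"

for p, r in [(LOW, LOW), (LOW, HIGH), (HIGH, LOW), (HIGH, HIGH)]:
    print(f"precision={p}, recall={r}, F1={f1_score(p, r):.2f}")
# precision=0.1, recall=0.1, F1=0.10
# precision=0.1, recall=0.9, F1=0.18
# precision=0.9, recall=0.1, F1=0.18
# precision=0.9, recall=0.9, F1=0.90
```

The F1 score stays low whenever either Precision or Recall is low, and becomes high only when both are high.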


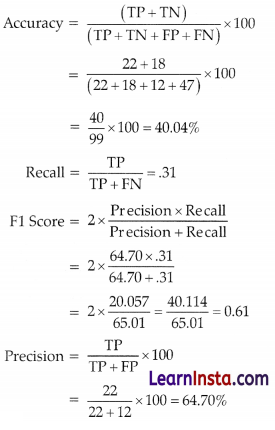

Case Study:

| The Confusion Matrix | Reality: 1 | Reality: 0 |
| --- | --- | --- |
| Prediction: 1 | 22 | 12 |
| Prediction: 0 | 47 | 18 |

Calculate Accuracy, Precision, recall and F1 Score for the above problem.

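Each cell of the matrix corresponds to one of the four conditions:

| | Reality: 1 | Reality: 0 |
| --- | --- | --- |
| Prediction: 1 | TP = 22 | FP = 12 |
| Prediction: 0 | FN = 47 | TN = 18 |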

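One way to work through the case study is sketched below. It reads the four counts from the confusion matrix (Prediction: 1 row gives TP and FP, Prediction: 0 row gives FN and TN) and applies the standard formulas; the variable names are chosen for illustration.

```python
# Counts read from the confusion matrix of the case study.
TP, FP = 22, 12  # Prediction: 1 row
FN, TN = 47, 18  # Prediction: 0 row

total = TP + TN + FP + FN                # 99 observations
accuracy = (TP + TN) / total             # 40 / 99
precision = TP / (TP + FP)               # 22 / 34
recall = TP / (TP + FN)                  # 22 / 69
f1 = 2 * precision * recall / (precision + recall)

print(f"Accuracy  = {accuracy:.3f}")   # 0.404
print(f"Precision = {precision:.3f}")  # 0.647
print(f"Recall    = {recall:.3f}")     # 0.319
print(f"F1 Score  = {f1:.3f}")         # 0.427
```

The low recall shows that the model misses most of the positive cases (47 out of 69), which pulls the F1 score down even though the precision is moderate.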